DensePure: Understanding Diffusion Models towards Adversarial Robustness

Abstract

Diffusion models have recently been employed to improve certified robustness through denoising. However, the theoretical understanding of why diffusion models are able to improve certified robustness is still lacking, which prevents further improvement. In this study, we close this gap by analyzing the fundamental properties of diffusion models and establishing the conditions under which they can enhance certified robustness. This deeper understanding allows us to propose a new method, DensePure, designed to improve the certified robustness of a pretrained model (i.e., a classifier). Given an (adversarial) input, DensePure performs multiple runs of denoising via the reverse process of the diffusion model (with different random seeds) to obtain multiple reversed samples. These samples are then passed through the classifier, and a majority vote over the inferred labels gives the final prediction. This design of using multiple runs of denoising is informed by our theoretical analysis of the conditional distribution of the reversed sample. Specifically, when the data density of a clean sample is high, its conditional density under the reverse process of a diffusion model is also high; thus, sampling from this conditional distribution can purify the adversarial example and return the corresponding clean sample with high probability. By using the highest-density point in the conditional distribution as the reversed sample, we identify the robust region of a given instance under the diffusion model's reverse process. We show that this robust region is a union of multiple convex sets and is potentially much larger than the robust regions identified in previous works. In practice, DensePure can approximate the label of the high-density region in the conditional distribution, which allows it to enhance certified robustness.
We conduct extensive experiments to demonstrate the effectiveness of DensePure by evaluating its certified robustness given a standard model via randomized smoothing. We show that DensePure is consistently better than existing methods on ImageNet, with a 7% improvement on average.
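The claim that high data density implies high conditional density under the reverse process can be illustrated with Bayes' rule. The derivation below is a sketch, not the paper's theorem: it assumes the standard DDPM forward process x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε with ε ~ N(0, I), and an idealized reverse process that samples exactly from the posterior p(x_0 | x_t). The symbols ᾱ_t, x̃_t and σ_t are introduced here for illustration, and the paper's exact statement and conditions may differ.

```latex
\begin{aligned}
p(x_0 \mid x_t) &\propto p(x_0)\, p(x_t \mid x_0)
  = p(x_0)\, \mathcal{N}\!\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t) I\right) \\
&\propto p(x_0)\, \exp\!\left(-\frac{\lVert x_t - \sqrt{\bar\alpha_t}\,x_0 \rVert_2^2}{2(1-\bar\alpha_t)}\right)
  = p(x_0)\, \exp\!\left(-\frac{\lVert x_0 - \tilde{x}_t \rVert_2^2}{2\sigma_t^2}\right), \\
\text{where}\quad \tilde{x}_t &= \frac{x_t}{\sqrt{\bar\alpha_t}}, \qquad \sigma_t^2 = \frac{1-\bar\alpha_t}{\bar\alpha_t}, \\
\hat{x}_0^{\ast} &= \arg\max_{x_0}\ \left[\log p(x_0) - \frac{\lVert x_0 - \tilde{x}_t \rVert_2^2}{2\sigma_t^2}\right].
\end{aligned}
```

The first factor is the data density and the second decays with distance from the rescaled input, so among clean samples at comparable distances, the one with higher data density receives higher conditional density. Under these assumptions, x̂₀* plays the role of the highest-density point used to define the robust region.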

Theoretical Contributions

In this paper, we provide a theoretical analysis of the ability of diffusion models to improve certified robustness. Our main contributions are as follows: (i) we explain why and how the diffusion model purifies adversarial examples to improve adversarial robustness; and (ii) we derive the robust region and robust radius of diffusion models, showing that the robust region can potentially be large.

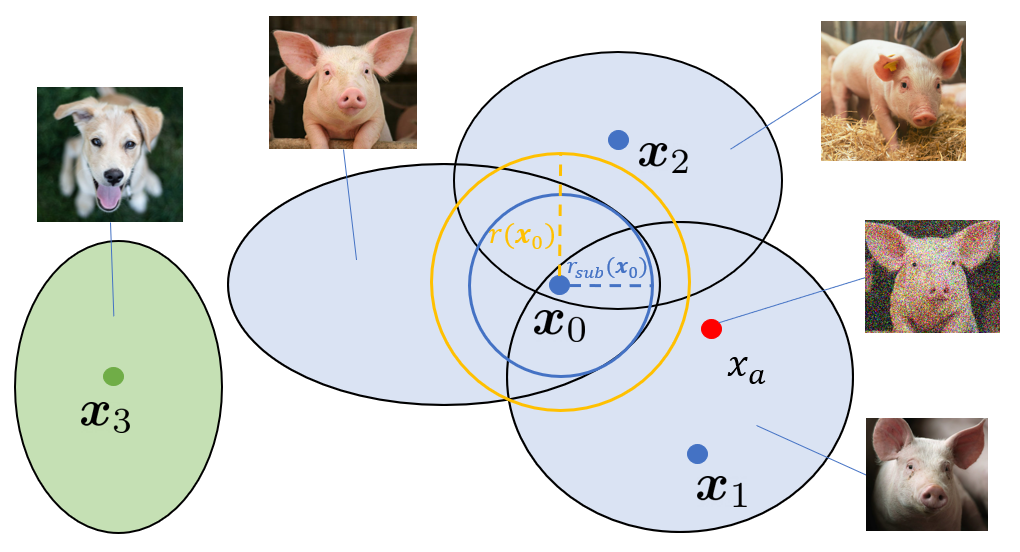

An illustration of the robust region, where x0, x1, x2 are samples with the ground-truth label and x3 is a sample with another label. xa = x0 + εa is an adversarial sample that has the correct classification but cannot be reversed back to x0. The blue and green shades are recovery regions, where noisy samples can be reversed back to their original samples by the diffusion model. When a data region has higher data density and larger distances to data regions with other labels, like the blue shade in the picture, we can obtain a larger robust radius r(x0) rather than the radius rsub(x0), which is restricted to the recovery region of x0. Thus, even if xa is not within the robust radius rsub(x0), it can still be successfully purified if it lies in the robust region of other samples with the ground-truth label.
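To make the figure concrete, here is a toy sketch, assuming a data distribution with finite support (four hypothetical 2-D points with made-up probability masses and labels, loosely mirroring x0 to x3) and a posterior of the form p(x | x_a) ∝ p(x) · exp(−‖x − x_a‖² / (2σ²)). The reversed sample is taken to be the highest-density point of that posterior. Because the comparison between any two support points is linear in x_a (the ‖x_a‖² terms cancel), each point's recovery region is an intersection of half-spaces, i.e. a convex set, and the robust region of a label is the union of its points' regions.

```python
import math

# Hypothetical toy data distribution with finite support: (point, probability mass, label).
# The values are made up for illustration and loosely mirror x0..x3 in the figure.
DATA = [
    ((0.0, 0.0), 0.2, "cat"),   # x0: clean sample with the ground-truth label
    ((1.0, 0.0), 0.4, "cat"),   # x1: higher-density sample with the same label
    ((0.0, 2.0), 0.1, "cat"),   # x2: another sample with the same label
    ((-1.5, 0.0), 0.3, "dog"),  # x3: sample with another label
]


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def log_posterior_score(point, mass, x_a, sigma):
    """Unnormalized log posterior: log p(x) - ||x - x_a||^2 / (2 sigma^2)."""
    return math.log(mass) - sq_dist(point, x_a) / (2 * sigma ** 2)


def highest_density_reversal(x_a, sigma):
    """Return the (point, mass, label) entry with the highest posterior density given x_a."""
    return max(DATA, key=lambda d: log_posterior_score(d[0], d[1], x_a, sigma))


def recovers_label(x_a, sigma, label):
    """True if x_a lies in the robust region of `label`, i.e. its reversal carries that label."""
    return highest_density_reversal(x_a, sigma)[2] == label


if __name__ == "__main__":
    sigma = 0.5
    x_a = (0.6, 0.0)  # x0 plus a perturbation
    print(highest_density_reversal(x_a, sigma)[0])  # (1.0, 0.0): reversed to x1, not x0
    print(recovers_label(x_a, sigma, "cat"))         # True: still purified to the right label
```

Here x_a = (0.6, 0.0) falls outside x0's own recovery region, yet its highest-density reversal is x1, which shares the ground-truth label; this matches the caption's point that x_a can still be purified.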

DensePure Framework

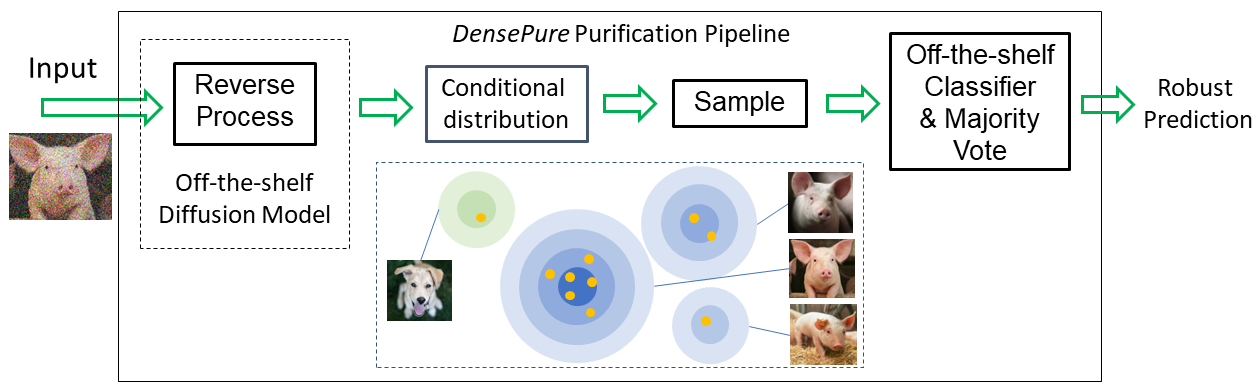

Our theoretical analysis of diffusion models allows us to propose a new method, DensePure, which improves the certified robustness of any given classifier by using the diffusion model more effectively. DensePure consists of two steps: (i) using the reverse process of the diffusion model to obtain a sample from the posterior data distribution conditioned on the adversarial input; and (ii) repeating the reverse process multiple times with different random seeds to approximate the label of the high-density region in the conditional distribution via a majority vote. In particular, given an adversarial input, we repeatedly feed it into the reverse process of the diffusion model to get multiple reversed examples, and feed them into the classifier to get their labels. We then apply a majority vote over the set of labels to get the final predicted label.

Pipeline of DensePure
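The two DensePure steps can be sketched in Python as follows. This is a toy stand-in, not the paper's implementation: the hypothetical `toy_reverse_process` draws one sample from a finite-support posterior p(x | x_a) ∝ p(x) · exp(−‖x − x_a‖² / (2σ²)) in place of a real diffusion reverse run, `toy_classifier` is a lookup table standing in for the pretrained classifier, and the integer seed plays the role of each run's random seed.

```python
import math
import random
from collections import Counter

# Hypothetical toy data: finite-support p_data with labels (values made up for illustration).
SUPPORT = [((0.0, 0.0), 0.2), ((1.0, 0.0), 0.4), ((0.0, 2.0), 0.1), ((-1.5, 0.0), 0.3)]
LABELS = {(0.0, 0.0): "cat", (1.0, 0.0): "cat", (0.0, 2.0): "cat", (-1.5, 0.0): "dog"}


def toy_reverse_process(x_a, sigma, seed):
    """Stand-in for one reverse run: sample x with prob ∝ p(x) exp(-||x - x_a||^2 / (2 sigma^2))."""
    rng = random.Random(seed)  # a different seed gives an independent reverse run
    logits = [
        math.log(p) - sum((xi - ai) ** 2 for xi, ai in zip(x, x_a)) / (2 * sigma ** 2)
        for x, p in SUPPORT
    ]
    top = max(logits)
    weights = [math.exp(l - top) for l in logits]  # shift by the max for numerical stability
    return rng.choices([x for x, _ in SUPPORT], weights=weights, k=1)[0]


def toy_classifier(x):
    """Stand-in for the pretrained classifier: look up the support point's label."""
    return LABELS[x]


def densepure_predict(x_a, sigma, num_votes,
                      reverse_process=toy_reverse_process, classifier=toy_classifier):
    """Reverse x_a num_votes times with different seeds, classify each sample, majority-vote."""
    votes = Counter(classifier(reverse_process(x_a, sigma, seed)) for seed in range(num_votes))
    return votes.most_common(1)[0][0]  # ties go to the label encountered first


if __name__ == "__main__":
    print(densepure_predict((0.6, 0.0), sigma=0.5, num_votes=11))
```

Sampling rather than always taking the argmax mirrors the stochastic reverse process; the majority vote over many draws approximates the label of the high-density region, and an odd `num_votes` avoids two-way ties.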

Main Results

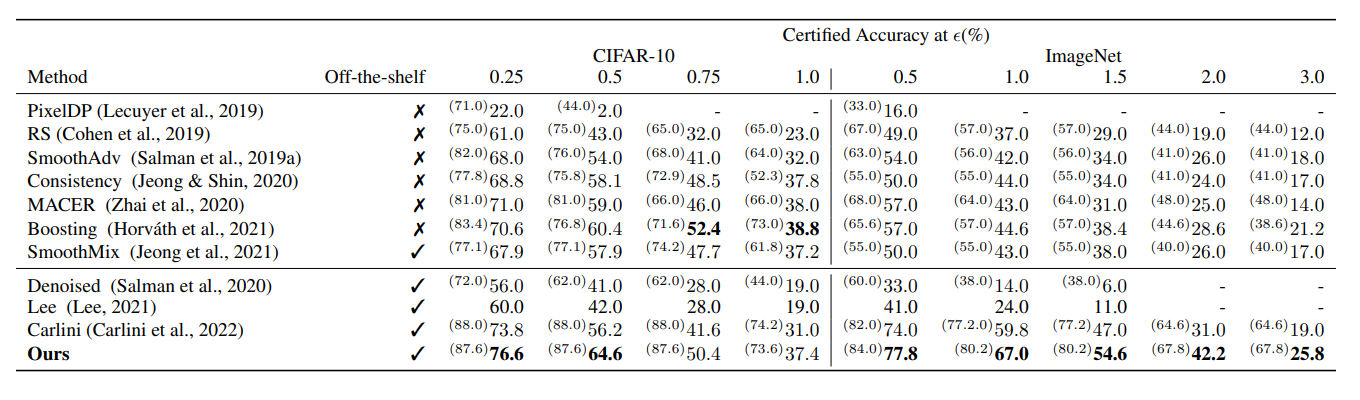

We empirically compare our method against other certified robustness baselines under randomized smoothing, especially Carlini et al. (2022), which is also an off-the-shelf method that uses diffusion models. Extensive experiments and ablation studies on CIFAR-10 and ImageNet demonstrate the state-of-the-art performance of DensePure.

- Compared with existing works. Compared to both on-the-shelf and off-the-shelf randomized smoothing methods, our method shows large improvements on CIFAR-10 in most cases and is consistently better than existing methods on ImageNet, with a 7% improvement on average.

Certified accuracy compared with existing works. The certified accuracy at σ = 0 for each model is given in parentheses. The certified accuracy in each cell is taken from the respective papers, except for Carlini et al. (2022). Our diffusion model and classifier are the same as those of Carlini et al. (2022), where the off-the-shelf classifier uses a ViT-based architecture trained on a large dataset (ImageNet-22k). Comparison with state-of-the-art adversarial training methods against AutoAttack on ImageNet with ResNet-50 and DeiT-S, respectively.
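The certified accuracies above come from randomized smoothing. As a rough sketch, assuming the standard certification step of Cohen et al. (2019): from n Gaussian-noise samples, count how often the (purified) classifier returns the top class, lower-bound that probability with a one-sided Clopper-Pearson interval, and certify an L2 radius σ · Φ⁻¹(p_A_lower) when the bound exceeds 1/2. The function names are hypothetical, the separate class-selection sampling step of that procedure is omitted, and the bound is found by bisection so that only the Python standard library is needed.

```python
import math
from statistics import NormalDist


def binom_tail(k, n, p):
    """P[X >= k] for X ~ Binomial(n, p), computed in log space for numerical stability."""
    if k <= 0:
        return 1.0
    if k > n or p <= 0.0:
        return 0.0
    if p >= 1.0:
        return 1.0
    log_p, log_q = math.log(p), math.log1p(-p)
    logs = [
        math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
        + i * log_p + (n - i) * log_q
        for i in range(k, n + 1)
    ]
    top = max(logs)
    return math.exp(top) * math.fsum(math.exp(v - top) for v in logs)


def clopper_pearson_lower(k, n, alpha):
    """One-sided (1 - alpha) Clopper-Pearson lower bound on p given k successes in n trials."""
    if k == 0:
        return 0.0
    lo, hi = 0.0, 1.0
    for _ in range(60):  # bisection: binom_tail(k, n, p) increases with p
        mid = (lo + hi) / 2
        if binom_tail(k, n, mid) < alpha:
            lo = mid
        else:
            hi = mid
    return lo  # slightly conservative end of the final bracket


def certify_radius(count_top, n, sigma, alpha=0.001):
    """Certified L2 radius sigma * Phi^{-1}(pA_lower), or None to abstain."""
    p_lower = clopper_pearson_lower(count_top, n, alpha)
    if p_lower <= 0.5:
        return None  # cannot certify: abstain
    return sigma * NormalDist().inv_cdf(p_lower)
```

For example, if all 100 noisy samples return the top class, p_A_lower ≈ 0.933 at α = 0.001, which certifies a radius of about 1.5σ; larger n tightens the bound and enlarges the certifiable radius.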

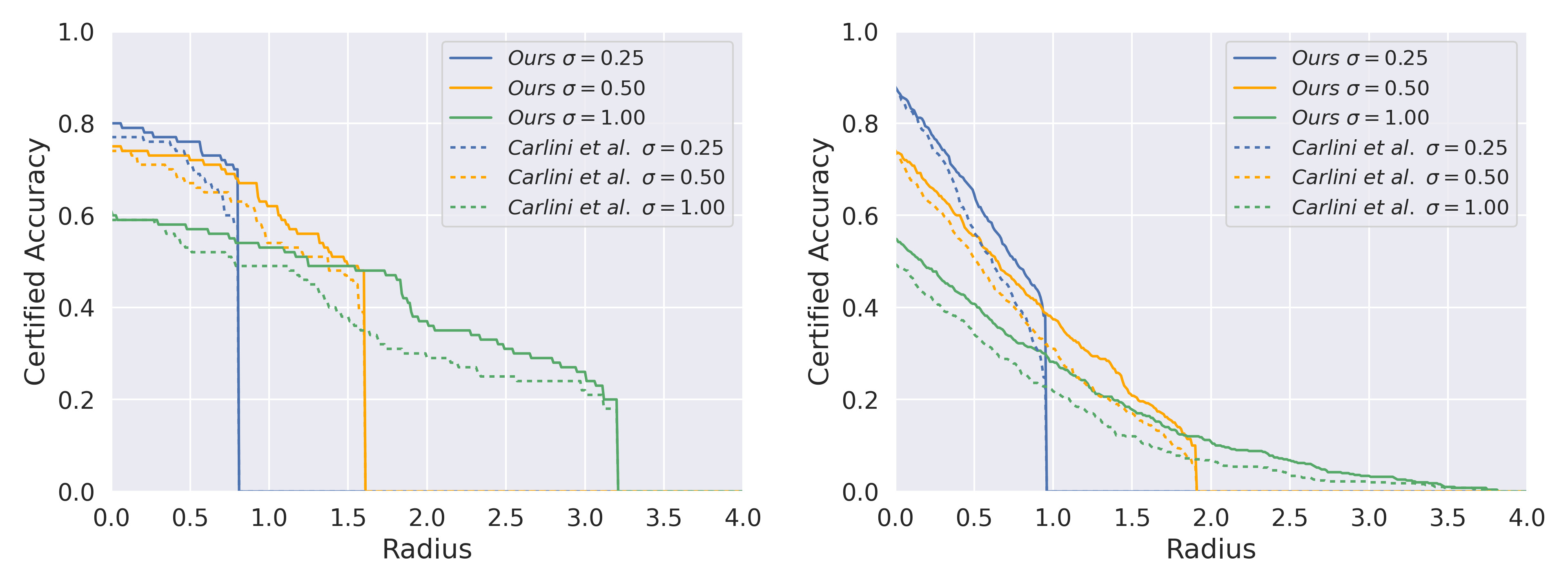

- Compared with Carlini et al. (2022). To better understand the importance of DensePure's design, which approximates the label of the high-density region in the conditional distribution, we compare DensePure in a more fine-grained manner with Carlini et al. (2022), which also uses the diffusion model. Experimental results show that our method consistently achieves higher certified robustness than Carlini et al. (2022).

Comparing our method with Carlini et al. (2022) on CIFAR-10 and ImageNet. The lines show the certified accuracy under different L2 perturbation bounds for Gaussian noise levels σ ∈ {0.25, 0.50, 1.00}.
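Assuming the usual definition, each line in these plots reports, for every L2 radius r, the fraction of test inputs that are both correctly classified and certified at a radius of at least r, with abstentions counted as failures. A minimal sketch of that bookkeeping, assuming per-example results are stored as hypothetical (prediction, label, radius) tuples with radius None for abstentions:

```python
from typing import List, Optional, Sequence, Tuple

# (predicted_label, true_label, certified_radius or None if the smoothed classifier abstained)
Result = Tuple[str, str, Optional[float]]


def certified_accuracy(results: Sequence[Result], radius: float) -> float:
    """Fraction of examples that are correct AND certified at an L2 radius >= `radius`."""
    hits = sum(
        1 for pred, true, r in results
        if r is not None and pred == true and r >= radius
    )
    return hits / len(results)


def certified_accuracy_curve(results: Sequence[Result], radii: Sequence[float]) -> List[float]:
    """Certified accuracy at each radius, i.e. one line of the plot for a fixed sigma."""
    return [certified_accuracy(results, r) for r in radii]


# Hypothetical per-example results for one sigma (made up for illustration).
example = [("cat", "cat", 0.8), ("dog", "cat", 1.2), ("cat", "cat", 0.3), ("cat", "cat", None)]
print(certified_accuracy_curve(example, [0.0, 0.5, 1.0]))  # [0.5, 0.25, 0.0]
```

The curve is non-increasing in r by construction, which is why every line in the plot drops as the perturbation bound grows.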

Our Insights and Limitations

In this work, we theoretically prove that the diffusion model can purify adversarial examples back to the corresponding clean sample with high probability, as long as the data density of the corresponding clean samples is high enough. Our theoretical analysis characterizes the conditional distribution of the reversed samples given the adversarial input, generated by the reverse process of the diffusion model. Using the highest-density point in the conditional distribution as the deterministic reversed sample, we identify the robust region of a given instance under the diffusion model's reverse process, which is potentially much larger than those identified by previous methods. Our analysis inspires us to propose DensePure, an effective pipeline for adversarial robustness. We conduct comprehensive experiments to show the effectiveness of DensePure by evaluating its certified robustness via the randomized smoothing algorithm. Note that DensePure is an off-the-shelf pipeline that does not require training a smoothed classifier. Our results show that DensePure achieves new state-of-the-art certified robustness against L2-norm perturbations. We hope that our work contributes to an in-depth understanding of diffusion models for adversarial robustness.

One main limitation of our method is its time complexity, because DensePure requires repeating the reverse process multiple times. In this paper, we use fast sampling to reduce the time complexity and show that, with fewer sampling steps and fewer majority votes, we can still achieve nontrivial certified accuracy. We leave more advanced fast sampling strategies to future work.
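The cost can be made concrete with simple arithmetic. Assuming that, under randomized smoothing, each of N Gaussian-noise copies of an input is purified by K independent reverse runs, and that a run over a timestep schedule needs one denoiser evaluation per transition, the total count is N × K × (steps per run). The evenly spaced schedule below is one generic way to shorten the reverse process and is an assumption for illustration, not necessarily the fast sampling strategy used in the paper; all names and numbers are hypothetical.

```python
from typing import List, Sequence


def respaced_timesteps(t_start: int, num_timesteps: int) -> List[int]:
    """Evenly spaced, decreasing timesteps from t_start down to 0 (a generic respacing scheme)."""
    if num_timesteps < 2:
        raise ValueError("need at least the start and end timesteps")
    steps = {round(t_start * i / (num_timesteps - 1)) for i in range(num_timesteps)}
    return sorted(steps, reverse=True)


def denoiser_calls(num_noise_samples: int, num_votes: int, schedule: Sequence[int]) -> int:
    """Total denoiser evaluations: one per transition, per reverse run, per noise sample."""
    return num_noise_samples * num_votes * (len(schedule) - 1)


full = list(range(100, -1, -1))        # every timestep from 100 down to 0
fast = respaced_timesteps(100, 5)      # [100, 75, 50, 25, 0]
print(denoiser_calls(1000, 10, full))  # 1000000
print(denoiser_calls(1000, 10, fast))  # 40000
```

Under these assumptions, reducing either the number of votes K or the number of timesteps scales the cost down linearly, which is the trade-off described above.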

Paper

DensePure: Understanding Diffusion Models towards Adversarial Robustness

Chaowei Xiao, Zhongzhu Chen, Kun Jin, Jiongxiao Wang, Weili Nie, Mingyan Liu, Anima Anandkumar, Bo Li, Dawn Song

Citation

@article{xiao2022densepure,
  title={DensePure: Understanding Diffusion Models towards Adversarial Robustness},
  author={Xiao, Chaowei and Chen, Zhongzhu and Jin, Kun and Wang, Jiongxiao and Nie, Weili and Liu, Mingyan and Anandkumar, Anima and Li, Bo and Song, Dawn},
  journal={arXiv preprint arXiv:2211.00322},
  year={2022}
}